FEATURED PRODUCTS

PRODUCT INTEGRATIONS

SEE HOW IT WORKS

How to protect data everywhere

See how our data detection and response software works

STILL HAVE QUESTIONS?

Talk to an expert

Request a free data risk assessment

OUR PLATFORM

THE PROBLEM WE SOLVE

INDUSTRIES WE SERVE

CUSTOMER TESTIMONIALS

Why finance is the prime target of AI attacks

Accurate identification and transparent reporting

GUIDE

A practical guide to data loss prevention for executives

Read the guide

GUIDE

Forcepoint AI Mesh

Discover AI Mesh

ANALYST REPORT

Gartner: 2025 Market Guide for Data Security Posture Management

Read the report

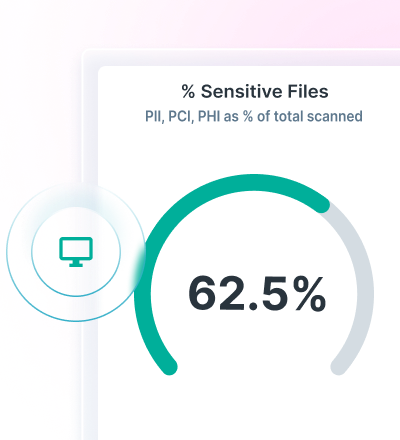

RISK ASSESSMENT

Request a free data risk assessment

Put your trust in us

WHY CHOOSE FORCEPOINT

COMPETITOR COMPARISON