Risk Mitigation is the Real First-Mover Advantage of AI

Forcepoint's 2024 Future Insights Post #2


John Holmes

Brice Cagle

When people think about the first-mover advantage of artificial intelligence, they’re likely drawn to ChatGPT – the chatbot that famously kickstarted the fervent adoption of generative AI. But there are hundreds of first-mover names that probably aren’t on your radar, like Goldman Sachs, Verizon, or Samsung.

Their first-mover advantage doesn’t come from adopting AI. It comes from restricting it. Or, more aptly put, from taking a measured approach to adoption – one that ensures the appropriate protection of company information and personal data.

When the masses were rushing to incorporate AI, Goldman Sachs, Verizon, and Samsung were quick to prohibit it. In fact, many companies have at one point prevented their employees from using generative AI because of the risk it poses to sensitive and confidential information.

As AI tools become more widely available and more deeply embedded in our industries, safe and measured adoption will become a critical factor in whether companies benefit from AI over the long term.

Ultimately, risk mitigation will pay dividends for companies that take the time to define, implement and enforce AI policies.

You can’t put the genie back in the bottle

Once data is out in the AI world, there’s no getting it back.

Private AI, which uses data lakes that you maintain full control over, is the safest way to implement AI. Think BloombergGPT, but for your business.

However, building private AI tools is cost-prohibitive for many organizations, leaving the vast majority to rely on public AI tools like ChatGPT, Bard or any of the other applications you’ll come across. Public AI solutions treat data the way the hippos in the “Hungry Hungry Hippos” board game treat marbles: once information is introduced to a tool, the tool ingests that data and can train on it.

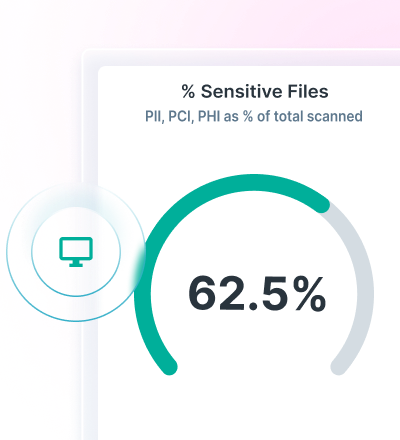

That data no longer only belongs to you. AI won’t return your financial statements, internal memos, or software code, and you can’t turn back the clock to prevent employees from leaking personally identifiable information (PII) or intellectual property. That’s why the goal of incorporating AI should be focused on preventing the improper introduction of sensitive information to the great unknown of public generative AI.
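To make the idea of preventing improper introduction concrete, here is a minimal sketch of a check that screens a prompt before it leaves the organization. It is an illustration only, not a description of any product: the pattern set, the `redact_prompt` function and the placeholder format are all assumptions, and two regular expressions are nowhere near sufficient for real PII detection.

```python
import re
from typing import List, Tuple

# Hypothetical, deliberately tiny pattern set for illustration.
# Real detection needs far broader coverage than two regular expressions.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_prompt(prompt: str) -> Tuple[str, List[str]]:
    """Replace matches with placeholders and report which categories were found."""
    found = []
    redacted = prompt
    for label, pattern in PATTERNS.items():
        # subn returns the new string and the number of substitutions made.
        redacted, count = pattern.subn(f"[{label} REDACTED]", redacted)
        if count:
            found.append(label)
    return redacted, found


if __name__ == "__main__":
    text, categories = redact_prompt(
        "Email jane.doe@example.com about SSN 123-45-6789."
    )
    print(text)
    print(categories)
```

The point of the sketch is the placement of the check: it runs before the prompt reaches a public tool, because nothing can be recalled afterward.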

There’s no question that success will be difficult. Defining exfiltration within the context of AI is no easy task, especially since it potentially covers everything from a single prompt to something as major as a full-on breach.

At a minimum, there are mitigation measures that should be implemented when using public generative AI. Those measures depend on the supplier, but they include choosing a more secure deployment model, opting out of the tool’s ability to learn from your content, or purchasing premium offerings that provide higher levels of protection.

AI security policies are essential to a business’ competitive survival

Confidentiality policies and agreements are standard practice for protecting intellectual property. Now, these policies must extend to the workforce’s interactions with AI.

In fact, we predict that AI security policies will become as essential to defending a business’ competitiveness as confidentiality policies are today.

AI heightens the need for security and for a governing framework. With AI, the impact of data entering the wild is magnified because data loss can happen quickly and at massive volume.

The goal should be knowing exactly which applications employees use and what data they are interacting with, so that AI does not undermine the proprietary rights in your content and, by extension, so that sensitive information like PII stays protected.

At Forcepoint, for instance, our AI policies are mostly concerned with acceptable use. We pay special attention to the way technologies intersect with our supply chain and data – similar to our open-source code approach – to ensure that usage doesn’t invite unnecessary risk.

Since all areas of business will be impacted by AI and its associated risks, a successful AI governance program needs to:

- Define secure use of AI applications and processes

- Ensure cross-functional resource investments among business units

- Show dedication to safe onboarding and deployment across departments and divisions
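As one way to picture the first item, defining secure use, an acceptable-use rule can be written down as data: each approved application is mapped to the most sensitive class of data it may receive, and anything not listed is denied. The sketch below is an assumption-laden illustration; the application names, the three sensitivity levels and the `is_use_allowed` helper are hypothetical and do not describe Forcepoint's policies or products.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical data classification levels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2


# Hypothetical policy table: the highest sensitivity each application may receive.
POLICY = {
    "public-chatbot": Sensitivity.PUBLIC,
    "enterprise-assistant": Sensitivity.INTERNAL,
    "private-model": Sensitivity.CONFIDENTIAL,
}


def is_use_allowed(app: str, data: Sensitivity) -> bool:
    """Return True only if the app is approved for data at this sensitivity level."""
    max_allowed = POLICY.get(app)
    if max_allowed is None:
        # Default deny: applications that are not in the policy are not approved.
        return False
    return data <= max_allowed


if __name__ == "__main__":
    print(is_use_allowed("public-chatbot", Sensitivity.CONFIDENTIAL))
    print(is_use_allowed("private-model", Sensitivity.CONFIDENTIAL))
```

The default-deny branch reflects the measured approach described earlier: a tool that has not been reviewed is treated as unapproved until someone adds it to the policy.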

Safely unlock the potential of AI

Setting a framework for the acceptable use of AI is an important first step, but maintaining true data security is a marathon.

If you have concerns about the security of your intellectual property and PII with AI, you’ll want to ensure you can enforce AI policies across the organization. Using Forcepoint Data Loss Prevention and Forcepoint ONE, organizations can write policies once and extend them across the organization, ensuring intellectual property remains secure everywhere it goes.

John Holmes

John D. Holmes is Chief Legal Officer and Corporate Secretary at Forcepoint. As Chief Legal Officer, John leads the company’s legal and regulatory affairs, intellectual property creation and protection, litigation, M&A, ethics, and compliance programs. He is also primary advisor to the Forcepoint Board of Directors on cybersecurity law, privacy, and associated regulatory compliance matters.

John started his legal career in private practice with Thompson & Knight, before embarking on his corporate career with Motorola, followed by Freescale Semiconductor where he served as Vice President, Legal and Government Affairs, until the company’s acquisition by NXP in 2015.

John earned a Bachelor of Arts degree in History and Economics from Tulane University and his Juris Doctorate degree from Tulane University School of Law.

Brice Cagle

Brice Cagle serves as Sr. Counsel and Data Protection Officer for G2CI.
